Is Blockchain Better For Processing Data Transaction And Audit Trail Than Storing Data

Leveraging blockchain for immutable logging and querying across multiple sites

BMC Medical Genomics volume 13, Article number: 82 (2020)

Abstract

Background

Blockchain has emerged as a decentralized and distributed framework that enables tamper-resilience and, thus, practical immutability for stored data. This immutability property is important in scenarios where auditability is desired, such as in maintaining access logs for sensitive healthcare and biomedical data. However, the underlying data structure of blockchain, by default, does not provide capabilities to efficiently query the stored data. In this investigation, we show that it is possible to efficiently run complex audit queries over the access log data stored on blockchains by using additional key-value stores. This paper specifically reports on the approach we designed for the blockchain track of the iDASH Privacy & Security Workshop 2018 competition. In this track, participants were asked to devise an efficient way to run conjunctive equality and range queries on a genomic dataset access log trail after storing it in a permissioned blockchain network consisting of four identical nodes, each representing a different site, created with the Multichain platform.

Methods

Multichain duplicates and indexes blockchain data locally at each node in a key-value store to support retrieval requests at a later point in time. To efficiently leverage the key-value storage mechanism, we applied various techniques and optimizations, such as bucketization, simple data duplication and batch loading, by accounting for the required query types of the competition and the interface provided by Multichain. In particular, we implemented our solution and compared its loading and query-response performance with SQLite, a commonly used relational database, using the data provided by the iDASH 2018 organizers.

Results

Depending on the query type and the data size, the run time difference between blockchain-based query-response and SQLite-based query-response ranged from 0.2 seconds to 6 seconds. A deeper inspection revealed that range queries were the bottleneck of our solution which, nevertheless, scales up linearly.

Conclusions

This investigation demonstrates that blockchain-based systems can provide reasonable query-response times to complex queries even if they only use simple key-value stores to manage their data. Consequently, we show that blockchains may be useful for maintaining data with auditability and immutability requirements across multiple sites.

Background

Blockchains allow a set of parties to collaboratively maintain a collection of data on a tamper-resilient and decentralized ledger. This provides numerous benefits compared to traditional data storage models, where the administration of a shared database is delegated to one or more trusted entities.

One particularly notable benefit is that a blockchain-based data storage solution mitigates security issues that can arise from malicious administrators. Moreover, it eliminates the potential for a single point of failure because data is replicated across multiple entities. Due to their immutability and auditability guarantees, blockchains are useful for storing access logs from multiple sites to different datasets (e.g., genomic research data). This is because access logs require strong auditability (e.g., auditing accesses to genomic data), transparency (e.g., publicly verifying that certain data is not misused by checking the logs) and tamper-resistance (e.g., preventing an attacker from manipulating the stored logs).

Furthermore, blockchains provide access to a uniform view of data that is logged from different sites. This, in conjunction with their other properties, can be beneficial in various contexts as demonstrated by previous investigations, such as those in the healthcare domain [1–3].

However, the underlying data structure of a blockchain, by default, does not provide an efficient technique to query the stored data. To overcome this limitation, most existing blockchain implementations, such as Bitcoin [4] and Ethereum [5], provide support for key-value stores to duplicate and store data on the blockchain. These key-value stores are then leveraged to support simple key-based retrieval queries that return the associated values.

In this paper, we show how to leverage the key-value store provided by a blockchain to run complex queries (e.g., range queries) efficiently on the blockchain data. We specifically report on the approach we designed for the blockchain track of the iDASH Privacy & Security Workshop 2018 competition [6]. Our findings show that our approach induces reasonable overhead, in terms of query-response time, in comparison to a traditional relational database management tool.

Blockchain

Blockchain was first introduced by Nakamoto as the underlying ledger of the now famous Bitcoin cryptocurrency [4]. Briefly, a blockchain is an append-only, distributed and replicated database. It allows the participants of a network to collectively maintain a sequence of data in a tamper-resilient way. More importantly, it does so without a requirement for a trusted third party by invoking a consensus mechanism.

Informally, a blockchain network operates as follows: participants broadcast their data, and certain nodes called miners gather and store the data they receive in wrapper structures called blocks. Through a consensus mechanism, the network elects a leader miner in a decentralized fashion for a sequence of epochs. The epoch leader broadcasts its block to the network and, having received the leader's block, other nodes store it in their local memory, where each block maintains a hash-link to the previous block.
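The hash-link between consecutive blocks can be illustrated with a minimal sketch. It is not how Bitcoin or Multichain serialize blocks: the block layout, the use of SHA-256 over a JSON encoding, and the helper names `block_hash`, `append_block` and `is_consistent` are assumptions made for illustration only.

```python
import hashlib
import json


def block_hash(block):
    """Hash a block's contents deterministically with SHA-256."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, data):
    """Append a block that stores the hash of its predecessor."""
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev_hash})


def is_consistent(chain):
    """Check that every block still points at the hash of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


chain = []
for entry in ["log-a", "log-b", "log-c"]:
    append_block(chain, entry)

intact = is_consistent(chain)          # the untouched chain verifies
chain[1]["data"] = "tampered"          # modify a block in the middle
tampered_detected = not is_consistent(chain)
```

Changing any earlier block changes its hash, so the link stored in the following block no longer matches. This is the property that makes silent modification of stored logs detectable.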

The consensus algorithm that the blockchain network deploys may depend on whether or not the network is permissionless. For example, Bitcoin operates on a permissionless network, where anyone is free to join and there is no uniform view of the network across participants. It utilizes a cryptographic puzzle called Proof-of-Work [7] to achieve consensus. This makes tampering with the order of blocks computationally infeasible when the majority of the computation power in the network follows the protocol honestly.

In permissioned networks, however, participants can use more efficient consensus algorithms, such as PBFT [8]. This is because the identity and number of participants are known to every party.

Multichain

Multichain is a platform to deploy permissioned blockchains [9]. In this context, permissioned means that access to the blockchain network can be arbitrarily restricted. Such networks are usually initialized by a single party who, at a later point in time, allocates permissions to other nodes to join the network and participate in the consensus protocol. For consensus, Multichain deploys a variant of a classical Byzantine fault tolerance algorithm whose exact details are provided in the Mining in MultiChain section of the corresponding whitepaper [10].

To handle queries efficiently, Multichain provides a module called streams, which uses an abstraction of a dictionary (i.e., key-value store) on top of a blockchain [11]. The streams module allows a node to store an arbitrary datum and an associated key by submitting a key-value pair in a transaction to the blockchain. Multichain duplicates and indexes the data stored on the blockchain in LevelDB (a key-value store [12]), which is locally maintained by each node to serve queries submitted to the blockchain efficiently [13]. In other words, the streams module allows a node to interact with the underlying key-value store. It is possible to store multiple values with the same key, such that query results can be returned as lists.

The streams module supports the following methods (among others) on top of a blockchain.

- createDictionary(dictionary-name): Creates a dictionary with the specified name.

- insert(dictionary-name, value, key): Inserts the key-value pair into the specified dictionary.

- retrieve(dictionary-name, key): Retrieves the value(s) corresponding to the given key from the specified dictionary.

We note that it is possible to create an arbitrary number of dictionaries on top of a blockchain, each of which stores data independently.
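To make the dictionary abstraction concrete, the following is an in-memory stand-in for the three methods listed above. It does not talk to Multichain at all; the class `DictionaryStore` and its method names are hypothetical and only mirror the abstract interface used in this paper.

```python
from collections import defaultdict


class DictionaryStore:
    """In-memory stand-in for the dictionary abstraction (not the Multichain API)."""

    def __init__(self):
        self._dicts = {}

    def create_dictionary(self, name):
        """Create an empty dictionary with the given name."""
        self._dicts[name] = defaultdict(list)

    def insert(self, name, value, key):
        """Store a value under a key; several values may share one key."""
        self._dicts[name][key].append(value)

    def retrieve(self, name, key):
        """Return the list of values stored under the key (empty if none)."""
        return list(self._dicts[name].get(key, []))


store = DictionaryStore()
store.create_dictionary("user-dictionary")
store.insert("user-dictionary", "log-1", key=1)
store.insert("user-dictionary", "log-2", key=1)
store.insert("user-dictionary", "log-3", key=2)

logs_for_user_1 = store.retrieve("user-dictionary", 1)
```

Because each key maps to a list, a retrieval naturally returns every value inserted under that key, in insertion order.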

Overview of the task

The blockchain track of the iDASH Privacy & Security Workshop 2018 competition provided a genomic dataset access log trail [14] in which each log consists of seven fields: timestamp, node, ID, ref-ID, user, action and resource. The action and resource fields can take on arbitrary string values. The other fields can take on arbitrary positive integer values.

This trail is stored on a blockchain, where it is assumed that the trail arrives as a data stream (i.e., one log at a time). The competition rules dictated that the blockchain must be created using Multichain version 1.0.4 and the network must consist of four identical nodes, each representing a different site, initialized with default parameters per Multichain specifications. It should be possible to insert and query the data using any node. Also, the rules prohibited the use of any off-chain mechanisms to handle the data other than what Multichain provides.

The goal is to develop a system that can query the blockchain efficiently under this setting while minimizing loading times and storage space. A viable solution must support two types of queries:

- Conjunctive equality queries on selected fields.

- A range query on the timestamp field.

Furthermore, the system should support the reporting of results in ascending or descending order on any field.
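As a small illustration of the log layout and the ordering requirement, the sketch below represents each log as a dictionary with the seven fields and sorts query results on a chosen field. The sample values (such as "GET" and "PUT") and the helper `order_by` are made up for illustration and are not taken from the competition data.

```python
from operator import itemgetter


def order_by(results, field, descending=False):
    """Return the results sorted on one field, ascending by default."""
    return sorted(results, key=itemgetter(field), reverse=descending)


# Illustrative logs with the seven fields described above.
results = [
    {"timestamp": 30, "node": 2, "id": 7, "ref_id": 1, "user": 4, "action": "GET", "resource": "B"},
    {"timestamp": 10, "node": 1, "id": 9, "ref_id": 2, "user": 2, "action": "PUT", "resource": "A"},
    {"timestamp": 20, "node": 3, "id": 8, "ref_id": 3, "user": 3, "action": "GET", "resource": "C"},
]

by_time = [log["timestamp"] for log in order_by(results, "timestamp")]
by_resource_desc = [log["resource"] for log in order_by(results, "resource", descending=True)]
```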

Methods

We now describe the techniques and the optimizations we deployed to handle queries efficiently in our system. We note that although our system is explicitly tuned for the blockchain track of iDASH 2018, our methods can be applied to support more general queries.

In what follows, we first describe how to handle conjunctive equality queries. Next, we describe how to handle range queries. We then explain how query response times can be further improved via batch loading.

Conjunctive equality queries

For each field, we create a dictionary that uses field values as keys and logs as values. For example, in the user dictionary at key 1, we have the logs whose user field's value is 1. Similarly, in the ID dictionary at key 2, we have the logs whose ID is 2. Note that the logs are duplicated for each field.

When processing such a query, we first find the most restrictive field key and retrieve the logs from the corresponding dictionary with that key. Next, we filter the retrieved logs with the other field keys. Finding the most restrictive field can be accomplished efficiently because Multichain keeps track of how many items are stored at a key in a dictionary, which can be accessed by a getCount(dictionary-name, key) method.

As an example, consider a query that requests logs with user = 1 ∧ ID = 2. We first compute x = getCount(user-dictionary, 1) and y = getCount(id-dictionary, 2). Then, if x > y, we retrieve the logs from the id-dictionary via retrieve(id-dictionary, 2) and discard the logs whose user field is not equal to 1.
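The selection-then-filter strategy can be sketched as follows. Plain Python dictionaries stand in for the per-field dictionaries on the blockchain, and `get_count`, `retrieve` and `conjunctive_query` are hypothetical helpers that mirror the abstract getCount and retrieve methods; this is a sketch of the idea, not the submitted implementation.

```python
from collections import defaultdict

FIELDS = ("timestamp", "node", "id", "ref_id", "user", "action", "resource")

# One dictionary per field: field name -> {field value -> [logs]}.
dictionaries = {field: defaultdict(list) for field in FIELDS}


def insert_log(log):
    """Duplicate the log into every per-field dictionary."""
    for field in FIELDS:
        dictionaries[field][log[field]].append(log)


def get_count(field, key):
    """Number of logs stored at a key in one field's dictionary."""
    return len(dictionaries[field].get(key, []))


def retrieve(field, key):
    """Logs stored at a key in one field's dictionary."""
    return list(dictionaries[field].get(key, []))


def conjunctive_query(conditions):
    """Answer field1 = v1 AND field2 = v2 AND ... for a dict of conditions."""
    # Pick the condition that matches the fewest logs.
    best = min(conditions, key=lambda f: get_count(f, conditions[f]))
    candidates = retrieve(best, conditions[best])
    # Filter the candidates against all conditions.
    return [
        log for log in candidates
        if all(log[f] == v for f, v in conditions.items())
    ]


logs = [
    {"timestamp": 10, "node": 1, "id": 1, "ref_id": 1, "user": 1, "action": "GET", "resource": "A"},
    {"timestamp": 11, "node": 1, "id": 2, "ref_id": 1, "user": 1, "action": "PUT", "resource": "B"},
    {"timestamp": 12, "node": 2, "id": 2, "ref_id": 2, "user": 3, "action": "GET", "resource": "A"},
]
for log in logs:
    insert_log(log)

result = conjunctive_query({"user": 1, "id": 2})
```

Only the smallest candidate list is ever fetched, so the cost of a query is bounded by the size of its most selective condition plus one count lookup per field.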

Range queries on a single field

To handle range queries, we designed a bucketization technique. That is, we create intervals of a fixed size and assign each log to exactly one of those intervals depending on the queried field's value. Each interval is referred to as a bucket and is identified by a unique value. In particular, our bucketization technique works as follows: first, we create a separate dictionary, range-dictionary, in which we assign each log the key ⌊Timestamp of log / N⌋, where N is a predefined bucket size.

Next, given a range query [x, y], which requests logs whose timestamps are between x and y (inclusive), we retrieve all of the logs with keys \(\lfloor x/N \rfloor + 1, \lfloor x/N \rfloor + 2, \dots, \lfloor y/N \rfloor - 1\). Finally, we retrieve and perform a linear scan of the logs at keys ⌊x/N⌋ and ⌊y/N⌋ and discard logs whose timestamps are not in [x, y].

Note that, for each log with a key in the range [⌊x/N⌋ + 1, ⌊y/N⌋ − 1], it is guaranteed that the log's timestamp is in [x, y]. As a consequence, we do not need to scan the logs stored at these keys.
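A minimal sketch of the bucketization scheme follows. A Python dictionary stands in for range-dictionary, the bucket size N = 10 is chosen only to keep the example small, and the function names are hypothetical.

```python
from collections import defaultdict

N = 10  # bucket size; a small illustrative value

range_dictionary = defaultdict(list)


def insert_log(log):
    """Store the log under the key floor(timestamp / N)."""
    range_dictionary[log["timestamp"] // N].append(log)


def range_query(x, y):
    """Return logs with x <= timestamp <= y."""
    lo, hi = x // N, y // N
    result = []
    # Boundary buckets may contain out-of-range logs, so scan them.
    # A set avoids reading the same bucket twice when lo == hi.
    for key in {lo, hi}:
        result.extend(
            log for log in range_dictionary.get(key, [])
            if x <= log["timestamp"] <= y
        )
    # Interior buckets lie entirely inside [x, y]; take them as-is.
    for key in range(lo + 1, hi):
        result.extend(range_dictionary.get(key, []))
    return result


for ts in (3, 9, 10, 17, 25, 31, 38, 42):
    insert_log({"timestamp": ts})

found = sorted(log["timestamp"] for log in range_query(9, 31))
```

For the query [9, 31], buckets 0 and 3 are scanned and buckets 1 and 2 are returned without inspection, which is exactly the saving the guarantee above provides.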

Improving retrieval speed via batch loading

We observed that, in the Multichain platform, loading logs in batches can substantially improve retrieval speed. Here, batch loading means that, instead of inserting one log in each transaction, we buffer and insert several logs in a single transaction.

We observed that if we load logs in batches of size k, then retrieving these logs is roughly k times faster than when they are stored one at a time. However, the competition rules required the solution to be crash-resistant, and a straightforward way of buffering would have failed to meet this requirement. For example, if our buffer size is four, then we load logs to the blockchain in batches of size 4. If the system crashes after the first two logs arrive, then both of these logs would be lost because the contents of the buffer had not been loaded to the blockchain at the time of the crash.

To overcome this problem, we extend our solution to maintain two dictionaries per field, a batch dictionary and a regular dictionary. In the batch dictionary, we load logs in batches, while in the regular dictionary, we load the logs one at a time (i.e., a buffer size of 1). When retrieving data, we select from the batch dictionary, compare the size of the retrieved list with the corresponding list in the regular dictionary and execute a crash recovery (if needed).

For instance, imagine a query that attempts to retrieve logs with node = 1. To support this query, we first retrieve the logs from the batch dictionary via batchLogs = retrieve(batch-node-dictionary, 1). We then compute the length of the corresponding list in the regular dictionary via logListSize = getCount(regular-node-dictionary, 1). Then, if the size of batchLogs is equal to logListSize, we simply return batchLogs as the result.

Otherwise, it becomes evident that we lost some data from the batch dictionary due to a crash. To recover, we compute the difference between the size of batchLogs and logListSize, i.e., x = logListSize - size(batchLogs). We then retrieve the last x items from the regular dictionary and append these to both batchLogs and the batch dictionary. Finally, we return batchLogs as the result.
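The two-dictionary scheme and its recovery step can be simulated in memory as below. The dictionaries, the volatile buffer and the `crash` helper are illustrative stand-ins, not Multichain calls. One detail is an assumption of this sketch rather than something stated above: after a recovery the buffer for that key is cleared, so that logs already copied into the batch dictionary are not flushed a second time.

```python
from collections import defaultdict

BUFFER_SIZE = 4

regular = defaultdict(list)   # key -> logs, written one at a time
batch = defaultdict(list)     # key -> logs, written BUFFER_SIZE at a time
buffers = defaultdict(list)   # volatile in-memory buffers, lost on a crash


def insert_log(key, log):
    """Write the log to the regular dictionary and buffer it for batching."""
    regular[key].append(log)
    buffers[key].append(log)
    if len(buffers[key]) == BUFFER_SIZE:
        batch[key].extend(buffers[key])   # flush a full buffer as one batch
        buffers[key].clear()


def crash():
    """Simulate a crash: whatever is still buffered is gone."""
    buffers.clear()


def retrieve(key):
    """Read from the batch dictionary, repairing it from the regular one."""
    batch_logs = list(batch[key])
    missing = len(regular[key]) - len(batch_logs)   # getCount comparison
    if missing > 0:
        tail = regular[key][-missing:]
        batch_logs.extend(tail)
        batch[key].extend(tail)
        buffers[key].clear()   # assumption: avoid re-flushing recovered logs
    return batch_logs


for i in range(6):
    insert_log(1, "log-%d" % i)
crash()                        # logs 4 and 5 were only in the buffer
recovered = retrieve(1)
```

After the crash, the batch dictionary holds four logs while the regular dictionary holds six, so the last two are copied over and the query still returns all six.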

Results

In this section, we report on a set of experiments designed to characterize the performance of our system.

Implementation details and experiment setting

We implemented our solution using Python 3.5.2 and Multichain 1.0.4, and used the Savoir wrapper to interact with the Multichain API [15]. Our code is available at [16]. Our test setup consisted of four identical virtual machines with the following specifications: 2-core CPU (2.6 GHz Intel Xeon E5), 7.5 GB of RAM and 50 GB of storage with the Ubuntu 14.04 LTS operating system.

We used the dataset supplied by the competition organizers, which consisted of four files, one per node, in which each file has \(10^{5}\) logs.

From the previous discussion regarding batch loading, it is evident that the larger the buffer size, the faster the retrieval speed. However, Multichain imposes a size limit on each transaction, such that it is not possible to increase the buffer size arbitrarily. We observed that, for the given dataset, the transaction size limit is reached for a buffer size around \(10^{4}\). As a result, we set the buffer size to \(10^{4}\).

Now, it can be seen that the number of buckets we have to retrieve decreases with increasing bucket size. However, the number of individual logs we have to scan may increase, because it depends on the distribution of logs over the buckets and on the query. Note that if one chooses the bucket size poorly, it could be the case that all the logs go to the same bucket.
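The trade-off can be seen with a small counting exercise. The sketch below uses synthetic, evenly spaced timestamps (an assumption; the competition data need not be uniform) and a hypothetical helper `query_cost` that counts how many bucket keys a range query touches and how many logs sit in the boundary buckets that must be scanned.

```python
def query_cost(timestamps, x, y, n):
    """Return (bucket keys fetched, logs scanned) for range [x, y] and bucket size n."""
    lo, hi = x // n, y // n
    buckets_fetched = hi - lo + 1
    boundary = {lo, hi}
    # Only logs in the two boundary buckets need an individual check.
    logs_scanned = sum(1 for t in timestamps if t // n in boundary)
    return buckets_fetched, logs_scanned


timestamps = range(0, 1000)  # synthetic: one log per time unit
costs = {n: query_cost(timestamps, 250, 749, n) for n in (10, 100, 1000)}
```

With this data, a bucket size of 10 touches 50 keys but scans only 20 logs, a size of 100 touches 6 keys and scans 200 logs, and a size of 1000 puts every log in one bucket so that all 1000 logs are scanned.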

To determine an appropriate bucket size, we ran several range queries of varying sizes on the given data and measured the average running time. During our empirical analysis, we observed an increase in the average running time as the bucket size increased from \(10^{1}\) to \(10^{7}\). After that point, however, the average running time started to decrease with increasing bucket size. As a result, we selected a bucket size of \(10^{7}\).

Finally, we compared our solution's performance with that of a traditional relational database, namely SQLite 3.22, which we ran on one of the virtual machines. We report averages over 10 runs and illustrate standard deviations with error bars in our plots.

Experiments

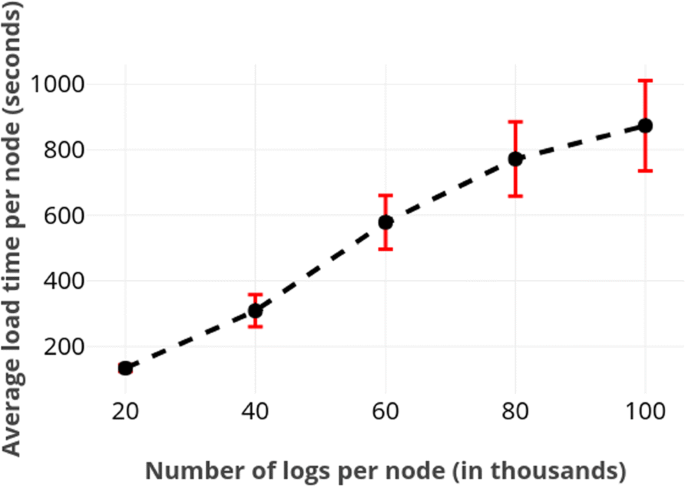

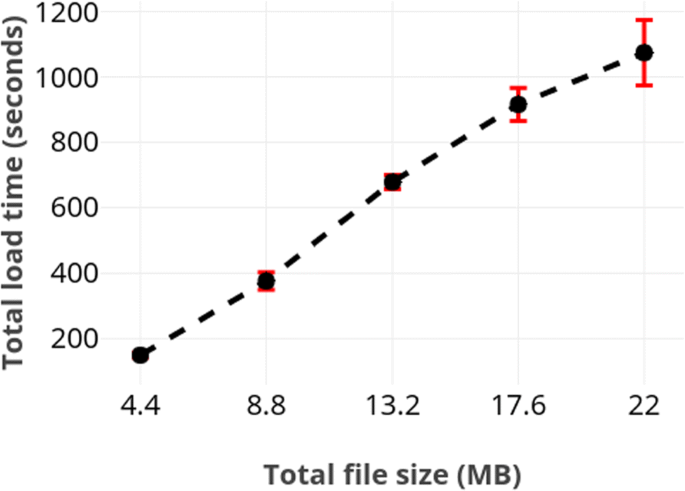

First, we measured the load time by loading logs of various sizes concurrently at each node. Figure 1 depicts the average load time of a node with respect to the number of logs loaded by it. We further plot the influence of file size on total load time, which corresponds to the slowest node. Those results are provided in Fig. 2. These figures do not include the results from SQLite because our solution is substantially slower. For example, loading all 400,000 logs required only about 4 seconds in SQLite.

Fig. 1: Average time required for a node to load logs of various sizes. The large standard deviation is likely due to network latency. As expected, load times scale linearly with the number of logs.

Fig. 2: Total load time required as a function of file size. As expected, the total load time scaled linearly in the size of the file.

Next, we investigated query-response times. We ran the test queries supplied by the competition organizers. The queries and the number of records returned by each were as follows:

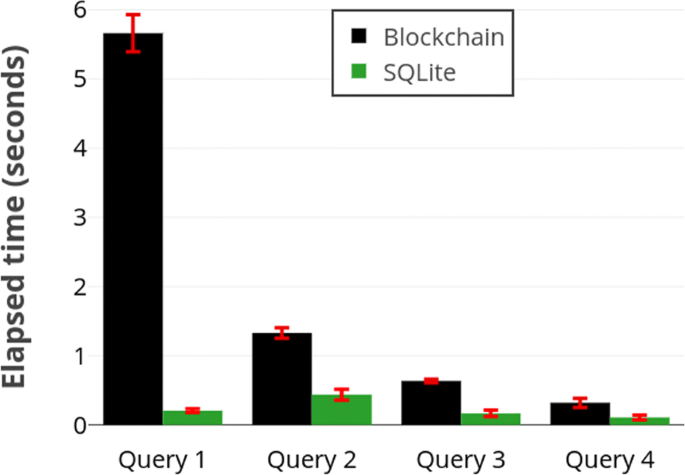

The running times for these queries are shown in Fig. 3. As the results indicate, the main bottleneck of our solution is the range query. We also note that SQLite's internal representation and processing scheme is quite different from our method. As such, the SQLite running time is not always highly correlated with the blockchain time.

Fig. 3: Running times of test queries. Query 1 is a range query and the others are conjunctive equality queries. Results imply that range queries dominate the performance.

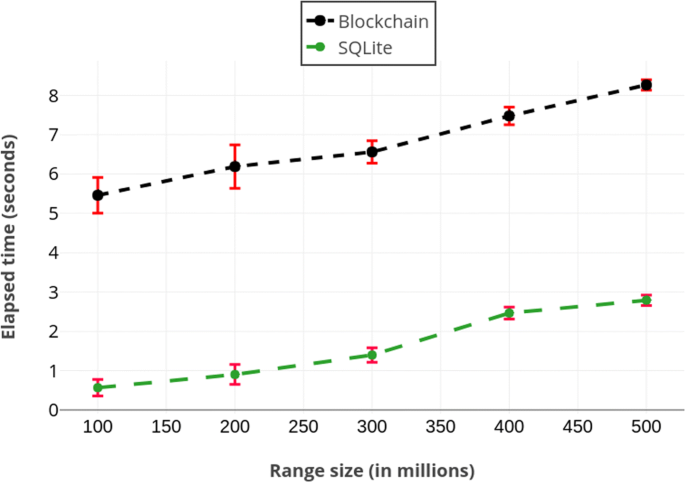

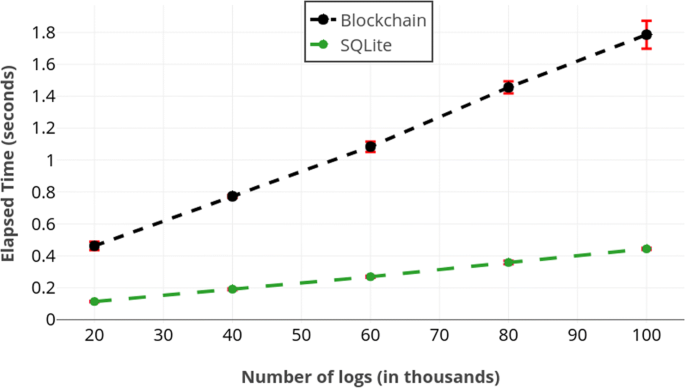

In Fig. 4, we compare the range query performance of our solution with SQLite's performance. We observe that both methods scale linearly, with a difference of 5–6 seconds between them.

Fig. 4: Running times of range queries.

Finally, Fig. 5 illustrates how the number of records retrieved influences the query-response time. In this experiment, we ran queries without any restrictions (i.e., the query returns every stored log) after loading the appropriate number of logs. Given how our approach handles conjunctive equality queries, this plot also represents the performance of conjunctive equality queries. This is due to the fact that a conjunctive equality query makes a call to getCount(.) per field given in the query in addition to retrieving the data. This adds only negligible overhead.

Fig. 5: Processing time required as a function of the number of retrieved records.

Finally, we considered the storage requirements. After loading all logs, the size of the blockchain was about 3 GB per node, whereas the size of the SQLite database was roughly two orders of magnitude smaller at 20 MB.

Discussion

In this section, we discuss certain limitations and highlight opportunities for improvement of our approach.

First, as mentioned earlier, the bucket and buffer sizes were based on an empirical investigation. We did not conduct extensive studies on these parameters to optimize them. It might be possible to improve query-response times by fine-tuning these parameters.

Second, it is possible to map string fields (i.e., resource and action) to integer values to reduce the size of logs. This may improve the loading and query-response times.
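One way such a mapping could look is sketched below. The `StringCodec` class is hypothetical and was not part of the evaluated system; note also that, under the competition rules, the mapping table itself would have to be kept on the blockchain rather than in memory as it is here.

```python
class StringCodec:
    """Assign each distinct string a small integer and map it back again."""

    def __init__(self):
        self._to_id = {}
        self._to_str = []

    def encode(self, text):
        """Return the integer for a string, allocating a new one if needed."""
        if text not in self._to_id:
            self._to_id[text] = len(self._to_str)
            self._to_str.append(text)
        return self._to_id[text]

    def decode(self, number):
        """Return the string that was assigned the given integer."""
        return self._to_str[number]


codec = StringCodec()
encoded = [codec.encode(a) for a in ("GET", "PUT", "GET", "DELETE")]
decoded = [codec.decode(i) for i in encoded]
```

Repeated strings reuse the same integer, so a log stores a short number in place of each action or resource string.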

Third, we did not consider parallelization. Although the Multichain platform imposes some limitations on parallelization (e.g., concurrently reading different parts of a stream is not possible), workarounds might exist [17].

Further, per the rules of the competition, we were not permitted to change the blockchain parameters. A straightforward way of improving performance might be to optimize these parameters. For example, the target-block-time parameter, whose default value is 15, controls the average number of seconds between two blocks. It might be possible to decrease loading times by letting the blockchain generate blocks more often.

Finally, we note that Multichain is expected to deploy some new features to support data handling more efficiently in future versions. For example, in version 2, the blockchain stores only the hashes of data [18]. Since transactions will be shortened, this will likely reduce loading and response times. To ensure immutability in this model, one can simply compare the hash of the data, after fetching it from the accompanying key-value store, with the hash on the blockchain.
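The hash comparison described here can be sketched as follows. The two Python dictionaries stand in for the on-chain hashes and the accompanying key-value store, and SHA-256 is an assumption made for illustration; the sketch does not reproduce how Multichain 2.0 actually stores or verifies data.

```python
import hashlib

on_chain_hashes = {}   # stand-in for hashes recorded on the blockchain
off_chain_store = {}   # stand-in for the accompanying key-value store


def digest(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the data."""
    return hashlib.sha256(data).hexdigest()


def publish(key, data: bytes):
    """Keep the data off-chain and record only its hash on-chain."""
    off_chain_store[key] = data
    on_chain_hashes[key] = digest(data)


def fetch_verified(key) -> bytes:
    """Fetch the data and check it against the on-chain hash."""
    data = off_chain_store[key]
    if digest(data) != on_chain_hashes[key]:
        raise ValueError("stored data does not match the on-chain hash")
    return data


publish("log-1", b"user=1 action=GET resource=A")
ok = fetch_verified("log-1")

off_chain_store["log-1"] = b"user=1 action=GET resource=B"  # tamper off-chain
try:
    fetch_verified("log-1")
    tamper_detected = False
except ValueError:
    tamper_detected = True
```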

Conclusion

In this paper, we demonstrated that blockchain technology can overcome inherent limitations on querying and, thus, can be a useful tool for managing data across multiple sites, particularly in scenarios that require strong immutability and auditability. We showed how bucketization, simple data duplication and batch loading can be utilized to run complex queries efficiently over blockchains that provide support for only simple key-value stores. In particular, we implemented these notions in our submission to the blockchain track of the iDASH 2018 competition, which supports efficient conjunctive equality and range queries over blockchains created with the Multichain platform. We illustrated that our approach induced reasonable overhead, in terms of query-response time, in comparison to a traditional relational database management tool.

Availability of data and materials

The dataset is available at [14] and our implementation is available at [16].

Abbreviations

- SQL: Structured Query Language

- PBFT: Practical Byzantine Fault Tolerance

References

1. Ozercan HI, Ileri AM, Ayday E, Alkan C. Realizing the potential of blockchain technologies in genomics. Genome Res. 2018;28(9):1255–63.

2. Choudhury O, Sarker H, Rudolph N, Foreman M, Fay N, Dhuliawala M, Sylla I, Fairoza N, Das AK. Enforcing human subject regulations using blockchain and smart contracts. Blockchain Healthc Today. 2018;1. https://www.blockchainhealthcaretoday.com/index.php/journal/article/view/10.

3. Li P, Nelson SD, Malin BA, Chen Y. DMMS: A decentralized blockchain ledger for the management of medication histories. Blockchain Healthc Today. 2019;2. https://blockchainhealthcaretoday.com/index.php/journal/article/view/38.

4. Nakamoto S. Bitcoin: A peer-to-peer electronic cash system. 2008. https://bitcoin.org/bitcoin.pdf.

5. Wood G. Ethereum: A secure decentralised generalised transaction ledger. Ethereum Proj Yellow Paper. 2014;151:1–32.

6. iDASH Secure Genome Analysis Competition 2018, BMC Medical Genomics. 2019.

7. Jakobsson M, Juels A. Proofs of Work and Bread Pudding Protocols (Extended Abstract). In: Preneel B, editor. Boston, MA: Springer; 1999. pp. 258–72.

8. Castro M, Liskov B. Practical byzantine fault tolerance and proactive recovery. ACM Trans Comput Syst. 2002;20(4):398–461. https://doi.org/10.1145/571637.571640.

9. Multichain: An Open Platform for Building Blockchains. https://www.multichain.com/. Accessed 2 June 2019.

10. MultiChain Private Blockchain - White Paper. https://www.multichain.com/download/MultiChain-White-Paper.pdf. Accessed 2 June 2019.

11. Introducing MultiChain Streams. https://www.multichain.com/blog/2016/09/introducing-multichain-streams/. Accessed 2 June 2019.

12. LevelDB. http://leveldb.org/. Accessed 2 June 2019.

13. How do Streams Work Under the Hood? https://www.multichain.com/qa/9635/how-do-streams-work-under-the-hood. Accessed 2 June 2019.

14. iDASH Privacy and Security Workshop 2018 Competition Tracks. www.humangenomeprivacy.org/2018/competition-tasks.html. Accessed 2 June 2019.

15. Savoir: A Python Wrapper for Multichain Json-RPC API. https://github.com/DXMarkets/Savoir. Accessed 2 June 2019.

16. https://github.com/TinfoilHat0/idash2018BlockchainTrack. Accessed 2 June 2019.

17. Multiprocessing and Streams. https://www.multichain.com/qa/10947/multiprocessing-and-streams. Accessed 2 June 2019.

18. Second MultiChain 2.0 Preview Release. https://www.multichain.com/blog/2018/01/second-multichain-2-0-preview-release/. Accessed 2 June 2019.

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive suggestions and comments.

About this supplement

This article has been published as part of BMC Medical Genomics Volume 13 Supplement 7, 2020: Proceedings of the 7th iDASH Privacy and Security Workshop 2018. The full contents of the supplement are available online at https://bmcmedgenomics.biomedcentral.com/articles/supplements/volume-13-supplement-7.

Funding

This publication was partly supported by NIH awards 1R01HG006844 and 1RM1HG009034, NSF awards CICI-1547324, IIS-1633331, CNS-1837627 and OAC-1828467, and ARO award W911NF-17-1-0356.

Author information

Contributions

All authors contributed equally to this work. All authors have read and approved this manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Ozdayi, M.S., Kantarcioglu, M. & Malin, B. Leveraging blockchain for immutable logging and querying across multiple sites. BMC Med Genomics 13, 82 (2020). https://doi.org/10.1186/s12920-020-0721-2

- DOI: https://doi.org/10.1186/s12920-020-0721-2

Keywords

- Blockchain

- Multichain

- Query-response

- Cross-site data sharing

Source: https://bmcmedgenomics.biomedcentral.com/articles/10.1186/s12920-020-0721-2

Posted by: ellislaut2000.blogspot.com
